“All problems in computer science can be solved by another level of indirection.” Butler Lampson (or abstraction, as some people call it)

Over the last few posts I have been covering how to unit test various technologies. In this post I will cover Linq to SQL. I’ve been thinking about this for a while but never had the chance to post about it. Various people have written about this before, but I thought I would give my view. I’m going to discuss how to unit test systems involving Linq to SQL, how to mock Linq to SQL, how this will come into play with the new ASP.net MVC Framework, and how TypeMock might have the answer.

Scenario

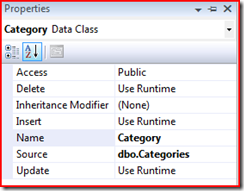

For this post, I decided to implement a small part of a shopping cart. You can return a list of categories, a list of products for each category and the product itself.

Unit Testing Linq to SQL

In my previous post, I discussed how to unit test Linq to XML and mentioned that it really isn’t that different from any other system. Linq to SQL is similar in that you can easily ignore the underlying implementation (Linq) when unit testing. However, I’m going to focus more on how to design the system and how it should all hang together.

As in any Linq to SQL implementation, you will need a data context. While you could unit test the data context directly, it is generated code, so I prefer to test it as part of a higher layer, which gives the tests more context and makes them more meaningful for the system. The most important part of the system is how we actually access the DataContext and retrieve the data, and making sure that this can be effectively unit tested.

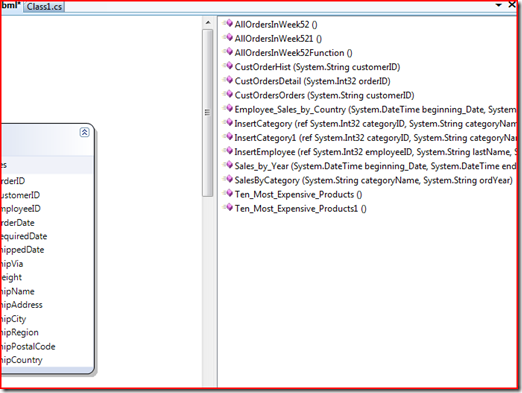

In my system I will have my HowToUnitTestDataContext generated by the Linq to SQL Designer. I will then have a ProductController which talks to my DataContext and returns the generated objects. Unlike with SubSonic, we can return the designer-generated objects as they are just POCOs (Plain Old CLR Objects). I will then have a set of unit tests targeting the ProductController. We are not doing any mocking at this point, so all of our unit tests will hit the database.

The first test creates a data context and ensures it has a connection. This confirms everything is set up correctly.

    [Test]
    public void CreateDataContext_ConnectionString_ReturnsDataContextObject()
    {
        HowToUnitTestDataContext db = ProductController.CreateDataContext();

        Assert.IsNotNull(db);
        Assert.IsNotNull(db.Connection);
    }

All the method does is initialise a data context and return it to the caller. The next test requests a list of all the categories from the database. However, because we haven’t populated anything yet it should just return an empty list.

    [Test]
    public void GetCategories_NoContent_ReturnEmptyList()
    {
        List<Category> categories = ProductController.GetCategories();

        Assert.IsNotNull(categories);
        Assert.AreEqual(0, categories.Count);
    }

We now have a base structure in place and can start filling in the details. The following test first inserts a category into the database (code is in the solution which you can download at the bottom of the post). It then requests all the categories from the database and ensures that what we returned was correct. We then use the MbUnit RollBack feature to ensure the database is left in the same state as it was before the test. The rollback works perfectly with Linq to SQL!

    [Test]
    [RollBack]
    public void GetCategories_SimpleContent_ReturnPopulatedList()
    {
        // Put the database into a known state: exactly one category.
        InsertCategory();

        List<Category> categories = ProductController.GetCategories();

        Assert.IsNotNull(categories);
        Assert.AreEqual(1, categories.Count);
        Assert.AreEqual("Microsoft Software", categories[0].Title);
    }

The code for GetCategories() is a simple Linq to SQL statement which returns a generic list.

    public static List<Category> GetCategories()
    {
        HowToUnitTestDataContext db = CreateDataContext();

        var query = from c in db.Categories
                    select c;

        // ToList() executes the query and materialises the results.
        return query.ToList();
    }

The next important test is the one which returns product information. Here, we use a tested GetCategory method to return a particular category. We then insert a temp product into the database for that category, meaning that we now have a known database state to work with. The test then simply verifies that when given a category we can return all the products in the database for it.

    [Test]
    [RollBack]
    public void GetProductsForCateogry_ValidCategoryWithProduct_PopulatedList()
    {
        InsertCategory();
        Category c = ProductController.GetCategory("Microsoft Software");
        InsertProduct(c);

        List<Product> products = ProductController.GetProductsForCategory(c);

        Assert.AreEqual(1, products.Count);
        Assert.AreEqual("Visual Studio 2008", products[0].Title);
    }

The implementation of this method is a little bit more complex as it joins a ProductCategories table to return the products within the particular category.

    public static List<Product> GetProductsForCategory(Category c)
    {
        HowToUnitTestDataContext db = CreateDataContext();

        // Join through the ProductCategories link table and keep
        // only the products linked to the given category.
        var query = from p in db.Products
                    join pc in db.ProductCategories on p.id equals pc.ProductID
                    where pc.CategoryID == c.id
                    select p;

        return query.ToList();
    }
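
To see what the join returns without a database, the same query shape can be run with Linq to Objects over in-memory lists. This is only a sketch: the Product, Category and ProductCategory classes below are hypothetical stand-ins for the designer-generated entities, and JoinSketch.ProductsFor is a name made up for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-ins for the designer-generated entities.
public class Product { public int id { get; set; } public string Title { get; set; } }
public class Category { public int id { get; set; } public string Title { get; set; } }
public class ProductCategory { public int ProductID { get; set; } public int CategoryID { get; set; } }

public static class JoinSketch
{
    // Same query shape as GetProductsForCategory, but over in-memory
    // collections instead of the tables on the data context.
    public static List<Product> ProductsFor(
        Category c,
        IEnumerable<Product> products,
        IEnumerable<ProductCategory> links)
    {
        var query = from p in products
                    join pc in links on p.id equals pc.ProductID
                    where pc.CategoryID == c.id
                    select p;

        return query.ToList();
    }
}
```

Given two products where only one is linked to the category, ProductsFor returns just that product. A product with no row in the link table never appears, because the join is an inner join.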

The final method is to return a particular product based on its ID. It works in a similar fashion to the previous methods.

    [Test]
    [RollBack]
    public void GetProductByID_ValidProductID_ReturnProductID()
    {
        InsertCategory();
        Category c = ProductController.GetCategory("Microsoft Software");
        InsertProduct(c);

        List<Product> products = ProductController.GetProductsForCategory(c);
        Assert.AreEqual(1, products.Count);

        // Look the product up again by the ID the database assigned.
        Product p = ProductController.GetProduct(products[0].id);

        Assert.AreEqual(p.Title, products[0].Title);
    }

In the implementation we then just return a single product using a lambda expression.

    public static Product GetProduct(int productID)
    {
        HowToUnitTestDataContext db = CreateDataContext();

        Product product = db.Products.Single(p => p.id == productID);

        return product;
    }
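
One thing worth knowing about Single is that it throws an InvalidOperationException when no element, or more than one element, matches the predicate. The sketch below shows the difference between Single and SingleOrDefault using Linq to Objects. The Product class is a hypothetical stand-in for the generated entity, and SingleSketch is a name invented for this example.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for the designer-generated Product entity.
public class Product { public int id { get; set; } public string Title { get; set; } }

public static class SingleSketch
{
    // Mirrors GetProduct: throws InvalidOperationException unless
    // exactly one product has the given ID.
    public static Product GetProduct(IEnumerable<Product> products, int productID)
    {
        return products.Single(p => p.id == productID);
    }

    // Alternative: returns null when no product has the given ID
    // (it still throws if more than one matches).
    public static Product FindProduct(IEnumerable<Product> products, int productID)
    {
        return products.SingleOrDefault(p => p.id == productID);
    }
}
```

Which one you want depends on whether a missing product is a bug or an expected case. Either way, a test for an unknown ID is a useful addition to the tests above.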

That pretty much covers unit testing basic Linq to SQL. Other parts of the system, such as the business layer or UI layer, can then talk directly to the ProductController to return all the information. However, this doesn’t offer anything if you want to mock out Linq to SQL.

Mocking Linq to SQL

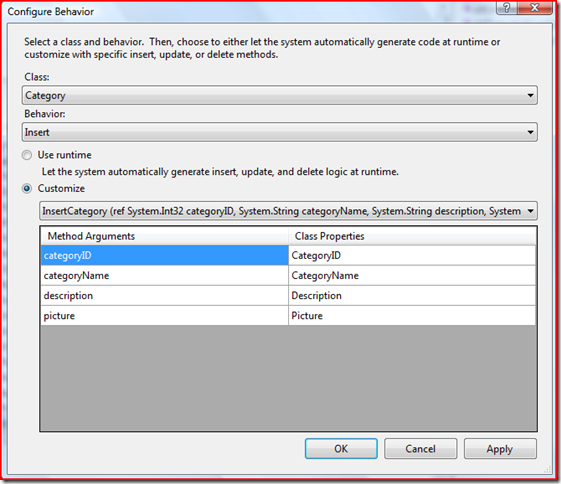

Unit testing Linq to SQL isn’t very difficult, but mocking Linq to SQL is a different beast. As with SubSonic, the best approach is to abstract away from your database. In this case, I am going to add an additional layer between my ProductController and my DataContext, called LinqProductRepository, which can then be mocked.

My first set of tests focuses on the LinqProductRepository, which talks to my DataContext and therefore to my database. The tests are very similar to the tests above for ProductController. I always test this layer against the database to ensure that it will work when it’s live in production. With mock objects you can never have the same level of confidence.

    LinqProductRepository m_ProductRepository = new LinqProductRepository();

    [Test]
    [RollBack]
    public void GetCategoryByName_NameOfValidCategory_ReturnCategoryObject()
    {
        InsertCategory();

        Category c = m_ProductRepository.GetCategory("Microsoft Software");

        Assert.AreEqual("Microsoft Software", c.Title);
        Assert.AreEqual("All the latest Microsoft releases.", c.Description);
    }

To give you an idea of the implementation, the GetCategory method looks like this:

    public Category GetCategory(string categoryTitle)
    {
        using (HowToUnitTestDataContext db = CreateDataContext())
        {
            Category category = db.Categories.Single(c => c.Title == categoryTitle);

            return category;
        }
    }

In order to make the ProductRepository mockable, it needs to implement an interface. The interface is very simple, as shown:

    public interface IProductRepository
    {
        List<Category> GetCategories();
        Category GetCategory(string categoryTitle);
        List<Product> GetProductsForCategory(Category c);
        Product GetProduct(int productID);
    }

We now have a fully implemented and tested ProductRepository, so we can create the ProductController. To start with, in my ProductControllerTests I set up the fields and the [SetUp] method that runs before each test. This ensures that we have our MockRepository (via Rhino Mocks) to hand, along with a mocked IProductRepository and our stub category and product. These two objects are simple, well-known objects (for the system) which we will return from our mocked methods. I’m using property injection to set the mocked repository on the ProductController, which is then used during the tests. By using a property we can have a default implementation for our production code but still give our test code a way to inject the mock object.

    MockRepository m_Mocks;
    IProductRepository m_ProductRepos;
    Category m_MockedCategory;
    Product m_MockedProduct;

    [SetUp]
    public void Setup()
    {
        m_Mocks = new MockRepository();
        m_ProductRepos = m_Mocks.CreateMock<IProductRepository>();

        // Inject the mock so the controller never touches the database.
        ProductController.ProductRepository = m_ProductRepos;

        m_MockedCategory = MockCategory();
        m_MockedProduct = MockProduct();
    }

We can then write our unit tests, which will be similar to our previous unit tests as they implement the same requirements. Within this test, we set up the expected return value for the GetCategories method on our ProductRepository. This simply uses a C# 3.0 collection initializer to create a list with one item, the stub category. We can then execute our asserts against the ProductController to verify that everything is linked correctly and working as expected.

    [Test]
    public void GetCategories_SimpleContent_ReturnPopulatedList()
    {
        using (m_Mocks.Record())
        {
            Expect.Call(m_ProductRepos.GetCategories()).Return(new List<Category> { m_MockedCategory });
        }

        using (m_Mocks.Playback())
        {
            List<Category> categories = ProductController.GetCategories();

            Assert.IsNotNull(categories);
            Assert.AreEqual(1, categories.Count);
            Assert.AreEqual("Microsoft Software", categories[0].Title);
        }
    }

The ProductController simply passes the call on to our ProductRepository, which is set through a property in our test Setup.

    // Defaults to the real, database-backed repository for production code.
    private static IProductRepository m_ProductRep = new LinqProductRepository();

    public static IProductRepository ProductRepository
    {
        get { return m_ProductRep; }
        set { m_ProductRep = value; }
    }

    public static List<Category> GetCategories()
    {
        return ProductRepository.GetCategories();
    }

This allows us to use the ProductController with a mock object. ProductController could be/do anything and by going via the LinqProductRepository we can use mock objects to save us accessing the database. In our real system, we would use the real tested LinqProductRepository object.

ASP.net MVC and Linq to SQL

Recently there has been a lot of buzz around the new ASP.net MVC framework the ASP.net team are releasing (CTP soon). If you haven’t read about this, Scott Guthrie has done a great post on the subject.

Scott Hanselman also did a demo of the MVC framework at DevConnections and has posted the source code online: DevConnections And PNPSummit MVC Demos Source Code. Demos 3 and 4 are about TDD and use mock objects and Linq to SQL.

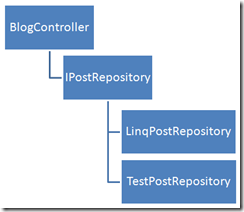

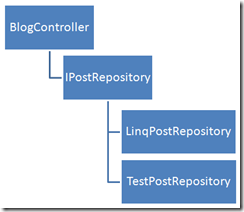

The layout is as follows:

The BlogController talks to an IPostRepository object, which is either a LinqPostRepository or a TestPostRepository. They use a stub object instead of a mock object, but the architecture is the same as my system. I would just like to say I didn’t just copy them 🙂

From this demo code, it looks like the way to mock your data access layer will be to use this approach.
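
For comparison with the Rhino Mocks tests earlier, here is a rough sketch of what a hand-written stub could look like for the shopping cart in this post. It is not taken from the demo code: StubProductRepository and its Add method are names I have made up, and Category and Product are simplified stand-ins for the generated entities.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Simplified stand-ins for the designer-generated entities.
public class Category { public int id { get; set; } public string Title { get; set; } }
public class Product { public int id { get; set; } public string Title { get; set; } }

public interface IProductRepository
{
    List<Category> GetCategories();
    Category GetCategory(string categoryTitle);
    List<Product> GetProductsForCategory(Category c);
    Product GetProduct(int productID);
}

// Hypothetical hand-written stub: keeps everything in memory.
public class StubProductRepository : IProductRepository
{
    private readonly List<Category> m_Categories = new List<Category>();
    private readonly Dictionary<int, List<Product>> m_ProductsByCategory =
        new Dictionary<int, List<Product>>();

    // Test helper (not part of the interface) to seed known data.
    public void Add(Category c, params Product[] products)
    {
        m_Categories.Add(c);
        m_ProductsByCategory[c.id] = products.ToList();
    }

    public List<Category> GetCategories()
    {
        return m_Categories.ToList();
    }

    public Category GetCategory(string categoryTitle)
    {
        return m_Categories.Single(c => c.Title == categoryTitle);
    }

    public List<Product> GetProductsForCategory(Category c)
    {
        List<Product> products;
        return m_ProductsByCategory.TryGetValue(c.id, out products)
            ? products.ToList()
            : new List<Product>();
    }

    public Product GetProduct(int productID)
    {
        return m_ProductsByCategory.Values
            .SelectMany(list => list)
            .Single(p => p.id == productID);
    }
}
```

In a test you would seed the stub with Add and assign it to ProductController.ProductRepository, exactly where the mock is assigned in Setup. The trade-off is that a stub only returns canned data, whereas the mock also verifies that the expected calls were made.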

Mocking the DataContext

The thing which first struck me about this was: why couldn’t we just mock the DataContext itself instead of messing around with repositories and controllers? The first answer was that it doesn’t implement an interface, but that’s a simple fix. The second problem is that the data context returns everything as System.Data.Linq.Table<>, which cannot be mocked. It does implement an ITable interface, but you cannot cast another object implementing ITable to Table<>.

Ayende, creator of Rhino Mocks, wrote a post called Awkward Testability for Linq for SQL which covers why it cannot be mocked. It’s a shame the Linq team didn’t think about TDD and mocking the data context, as it would have made a big difference to the system design. Maybe this is a lesson for anyone creating an API at the moment: think about testability! I think the ASP.net team have realised this.
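
One way around the Table<> problem is to hide the data context behind your own interface that exposes IQueryable<T> instead, since Table<T> implements IQueryable<T> and so does any list via AsQueryable(). The following is only a sketch of that idea under my own assumptions: ICategorySource, InMemoryCategorySource and CategoryQueries are hypothetical names, and Category is a stand-in for the generated entity. A production implementation would wrap the generated data context and return its Categories table.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Stand-in for the designer-generated Category entity.
public class Category { public int id { get; set; } public string Title { get; set; } }

// Hypothetical abstraction: exposes IQueryable<T> rather than Table<T>,
// so a test double can supply any queryable sequence.
public interface ICategorySource
{
    IQueryable<Category> Categories { get; }
}

// Test double backed by a plain list.
public class InMemoryCategorySource : ICategorySource
{
    private readonly List<Category> m_Items;

    public InMemoryCategorySource(IEnumerable<Category> items)
    {
        m_Items = items.ToList();
    }

    public IQueryable<Category> Categories
    {
        get { return m_Items.AsQueryable(); }
    }
}

public static class CategoryQueries
{
    // The query is written once against the interface and runs
    // unchanged against either implementation.
    public static List<string> TitlesStartingWith(ICategorySource source, string prefix)
    {
        var query = from c in source.Categories
                    where c.Title.StartsWith(prefix)
                    orderby c.Title
                    select c.Title;

        return query.ToList();
    }
}
```

Be aware that Linq to Objects will happily run queries that Linq to SQL cannot translate into SQL, so tests like this complement the database tests rather than replace them.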

Mocking with TypeMock

That said, it looks like TypeMock might be able to provide an answer. TypeMock is a mocking framework, but an extremely powerful one: it can mock any object within your system, regardless of its implementation or whether an interface is available. I will be looking at TypeMock more over the next few weeks, but before then visit their site. It’s not free (a 30-day trial is available), but if you need to do this type of mocking then it really is your only solution, and it can greatly increase your test coverage and improve your test suite as a whole.

You can read the initial ideas over on their blog – Eli Lopian’s Blog (TypeMock) » Blog Archive » Mocking Linq – Preview. Going in depth on TypeMock and Linq deserves its own post, however I couldn’t resist posting some code now.

Below, we have a test which mocks out querying a simple list. Here, we have a list of customers and a dummyData collection. Our actual Linq query is against m_CustomerList, but TypeMock does some ‘magic’ under the covers and tells the CLR that the query should just return the dummyData. As such, instead of returning the one matching customer record, we get the two dummy data records. How cool!!!

    public class Customer
    {
        public int Id { get; set; }
        public string Name { get; set; }
        public string City { get; set; }
    }

    [Test]
    [VerifyMocks]
    public void MockList()
    {
        List<Customer> m_CustomerList = new List<Customer> {
            new Customer{ Id = 1, Name="Dave", City="Sarasota" },
            new Customer{ Id = 2, Name="John", City="Tampa" },
            new Customer{ Id = 3, Name="Abe", City="Miami" }
        };

        var dummyData = new[] {new {Name="fake",City="typemock"},
                               new {Name="another",City="typemock"}};

        using (RecordExpectations recorder = RecorderManager.StartRecording())
        {
            // Mock Linq
            var queryResult = from c in m_CustomerList
                              where c.City == "Sarasota"
                              select new { c.Name, c.City };

            // return fake results
            recorder.Return(dummyData);
        }

        var actual = from c in m_CustomerList
                     where c.City == "Sarasota"
                     select new { c.Name, c.City };

        Assert.AreEqual(2, actual.Count());
    }

But it gets better! Given our original ProductController (no repositories, mocks, fakes, stubs) we can tell TypeMock that for this statement, always return the dummyData. As such, the ProductController never hits the database.

    [Test]
    [VerifyMocks]
    public void MockDataContext()
    {
        Category category = new Category();
        category.id = 1;
        category.Title = "Test";
        category.Description = "Testing";

        List<Category> dummyData = new List<Category> { category };

        using (RecordExpectations r = RecorderManager.StartRecording())
        {
            // Mock Linq
            List<Category> mocked = ProductController.GetCategories();

            // return fake results
            r.Return(dummyData);
        }

        List<Category> categories = ProductController.GetCategories();

        Assert.AreEqual(1, categories.Count);
        Assert.AreEqual("Test", categories[0].Title);
        Assert.AreEqual("Testing", categories[0].Description);
    }

Feel free to download the solution and debug the methods to see it for yourself. I think it’s cool.

Summary

In summary, I hope you have found this post useful and interesting. I’ve covered how to unit test your Linq to SQL code and how you go about mocking Linq to SQL. I then finished by giving you a quick look at what TypeMock can offer. It is possible to mock Linq to SQL, however it’s not as simple as it could be. If you have any questions, please feel free to contact me.

Download Solutions – TestingLinqToSQL.zip | TestingLinqToSQLMock.zip | TestingLinqToSQLTypeMock