Until recently I considered Cisco to be a company that focuses primarily on data centres and networking hardware. Attending the Cisco Live event last week changed my mind. Times are changing! Cisco is changing.

The last three years have introduced dramatic changes to the infrastructure ecosystem. Container technology has become accessible. Scheduling platforms like Mesos are in use outside of the top Silicon Valley companies. Automating the deployment process is possible with amazing tools like Terraform and Ansible. The microservices style, or component-based architecture, has been born. Many developers will simply consider microservices to be another buzzword, a new term for known ways of architecting systems. While this is true, the latest conversations have reignited the discussion about the risks of monolithic systems. They have also introduced new questions about deploying these components as globally distributed services.

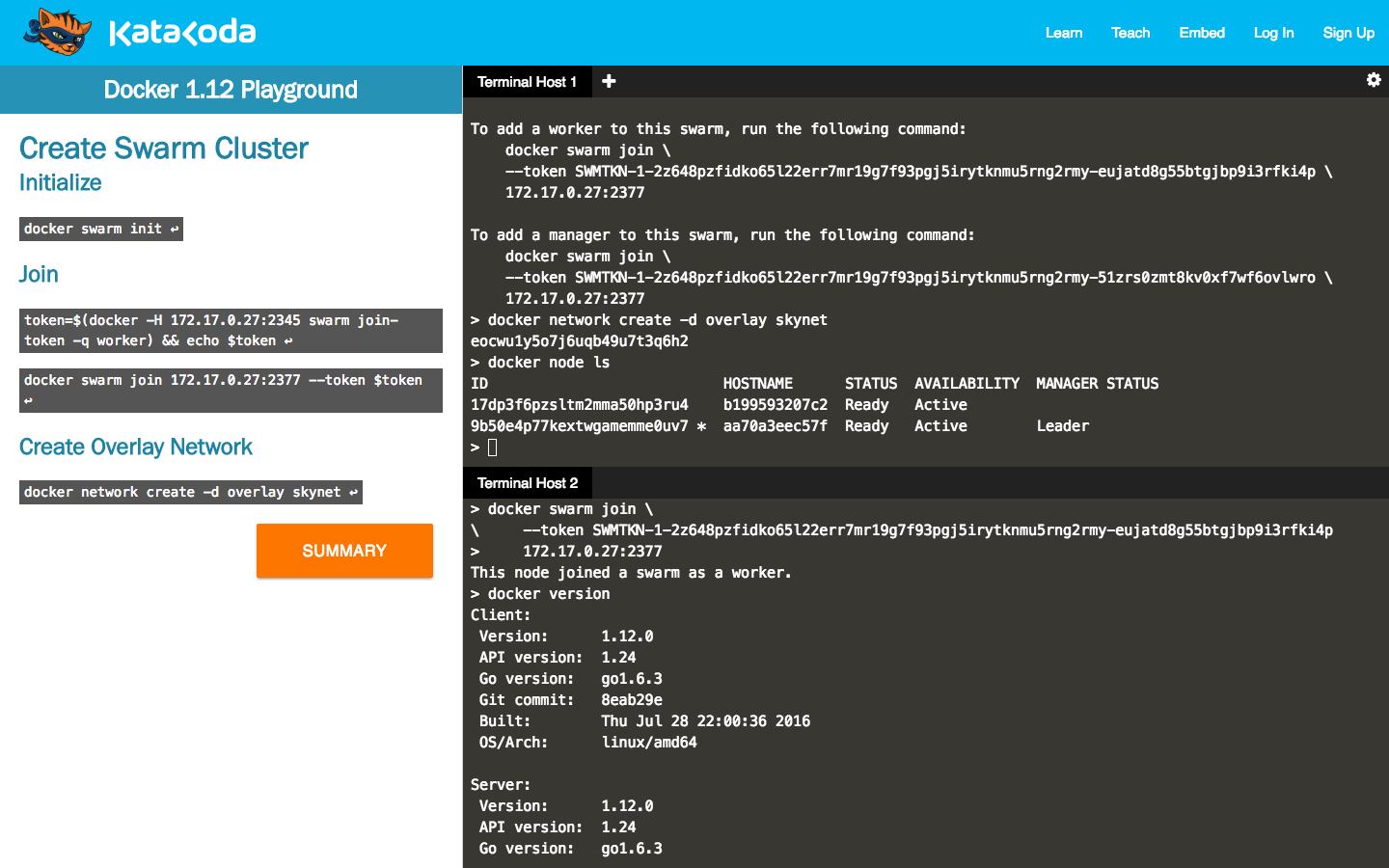

These questions are causing many sleepless nights in the developer world. The learning curve to understand all the moving parts is steep. Some days I believe it’s almost vertical. I’m currently working on solving this problem with Katacoda. It’s an interactive learning platform dedicated to helping developers understand this rapidly changing world.

Cisco and their partners are creating tools to answer similar questions. They consider issues like deploying distributed services and making use of the available open source tooling. During Cisco Live the majority of the conversations centred on the Cisco Cloud team. As their answer, and with support from Container Solutions, Remember to Play and Asteris, Cisco has built Mantl.

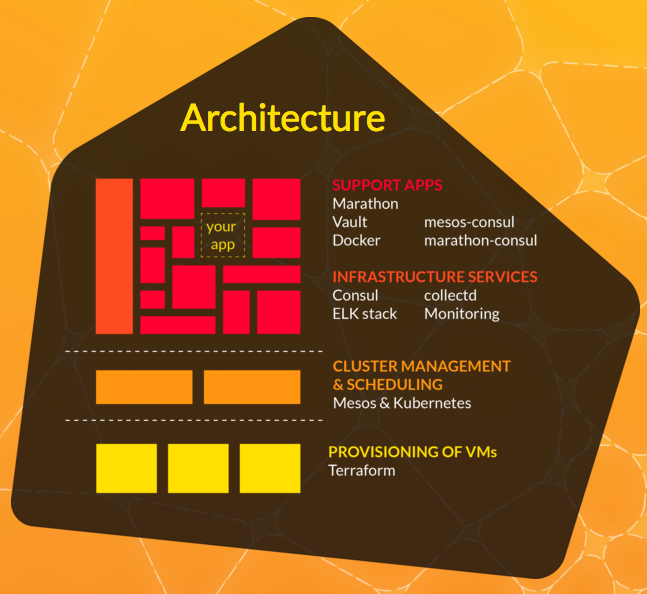

According to Cisco, Mantl “is a modern platform for rapidly deploying globally distributed services”. From my perspective, it’s creating a best-of-breed platform. It combines the finest open source systems and makes them simple to deliver as end-to-end solutions. This aim is achieved without any vendor lock-in and by releasing the product under the Apache License. This way the platform fits the “Batteries Included But Removable” mindset.

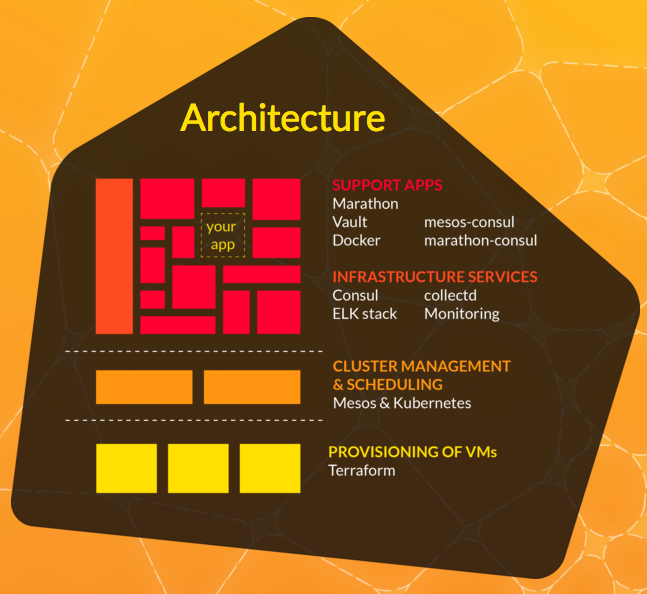

Mantl is the glue that connects services and infrastructure together. Out of the box, it manages the provisioning of infrastructure and software using code artefacts. It manages deployments using Ansible and Terraform, which means it supports the major cloud platforms. It goes on to deploy your software onto a Mesos cluster, with software-defined networking via Calico, service discovery using Consul, and logging with the ELK stack, to name a few. All of it is managed under source control, exactly where it should be.
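To make the "deploy onto Mesos, discover via Consul" flow a little more concrete, here is a minimal sketch in Python. It is not Mantl's own API. It only builds the JSON body that Marathon (the Mesos framework mentioned later in this post) accepts on its `POST /v2/apps` endpoint, and the URL for Consul's `/v1/catalog/service/<name>` catalog lookup. The app name `fog-predictor`, the command, the resource sizes and the Consul address are all hypothetical, and I'm assuming the service is registered in Consul under the same name as the app.

```python
import json
from urllib.parse import quote


def marathon_app(app_id: str, cmd: str, instances: int = 2,
                 cpus: float = 0.25, mem: int = 128) -> dict:
    """Build a minimal Marathon app definition (JSON body for POST /v2/apps)."""
    if instances < 1:
        raise ValueError("instances must be at least 1")
    return {
        "id": "/" + app_id.strip("/"),  # Marathon app ids are path-like
        "cmd": cmd,                     # command each task runs
        "instances": instances,         # number of tasks to schedule
        "cpus": cpus,                   # CPU share per task
        "mem": mem,                     # memory per task, in MB
    }


def consul_service_url(consul_addr: str, service: str) -> str:
    """URL of the Consul catalog endpoint that lists nodes providing a service."""
    base = consul_addr.rstrip("/")
    return f"{base}/v1/catalog/service/{quote(service, safe='')}"


# Hypothetical app name, command and Consul address, for illustration only.
app = marathon_app("fog-predictor", "python -m http.server 8080", instances=3)
payload = json.dumps(app, indent=2, sort_keys=True)
print(payload)
print(consul_service_url("http://localhost:8500", "fog-predictor"))
```

Because the definition is plain data, it can live in source control next to the Terraform and Ansible files, which is the point of the paragraph above. Actually sending it to a cluster would need an HTTP client and the address of your Marathon instance, which I've left out here.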

The decision to build on top of existing tooling is significant. By not re-inventing the wheel, Mantl becomes an appealing platform: a combination of your existing go-to tooling.

Container Solutions presented an impressive example of how to work with the platform. They have put together a case-study-based system that collects data for localised fog predictions. Who doesn’t like IoT, big data and drones?

The resulting architecture looks like something we built for a previous client that involved predicting faults. In theory, if we had used Mantl then we’d have saved significant time and investment on our infrastructure work. We used the ELK stack, Consul and many other tools Mantl is based on, meaning it would have felt familiar. We’d also have gotten the benefits of running on top of Mesos/Marathon for free. As a result, the team could have spent more energy on data analytics instead of infrastructure.

Mantl provides an interesting future and direction. The container ecosystem is still young with no clear winner. Mantl’s approach feels sane as it’s agnostic to the underlying tooling. As a result, it has the potential to win the hearts and minds of many users.

But it’s not going to be an easy task. One of the main challenges I foresee is shielding users from the underlying complexity as the platform grows. It’s important to keep the initial setup a simple and welcoming process. Another challenge will be educating users in how to use Kibana, Marathon, Vault, etc. once the platform is up and running.

Ensuring the platform is easy to get started with is the key. I’m seeing too many platforms attempting a long sales approach that puts developers off. Developers don’t want to jump through hoops to start playing with technologies. One of the worst things companies can do is force them to join a “Sales Call” to see if they’re suitable. The great aspect of Mantl is that it’s open to everyone. By using familiar tooling like Ansible, Terraform and Vagrant, the platform allows you to get started quickly. Other “Control Planes” should take note.

Mantl wasn’t the only interesting project discussed during the conference. Shipped is a hosted CI/CD/PaaS platform that uses Mantl under the covers. Mantl handles deploying your application onto a Mesos cluster on AWS or other cloud providers, as it’s completely cloud agnostic.