Recently, Red Gate sent me on the ISEB Foundation Testing certification course. I had heard a lot of reports about the certification from fellow testers, but the course was only two days long and held in-house, and I had some free time, so I thought: why not? I might learn something.

Now that I have my mark, I feel I should ‘share’ my experience and my view of the certification.

Theory and Terminology

I understand that this is a foundation course; however, terminology still accounts for a large percentage of it. The aim is to have consistent terminology across the industry and make sure everyone is on the same page. All of the terminology used is listed in the glossary. Personally, I don’t find knowing the correct terminology that useful in the real world: for example, knowing what the term ‘failure’ means is useless if you cannot identify one.

In general, the course focused on the theory side of testing, and in particular on testing in a perfect world where you have a fully testable spec. The advice was that if the spec isn’t testable, you should tell the business that you can’t do any work until it is. I’m not sure how many companies would welcome such a demanding stance. As a result of focusing on specification-driven testing, the course didn’t go into much depth about Exploratory Testing beyond defining the term, which is a shame, as I feel exploratory testing is much more important and more widely applicable.

Ignorant of new development processes

Most of the discussion of the testing process was based around the V-Model. The V-Model is great: it’s a visual representation of a sequence of steps, with tasks down the left and the corresponding verification steps up the right. For example, when you receive your detailed, testable specification, you create the acceptance tests that will be used to verify the system. However, it starts to get a bit murky when you don’t have the stages down the left, or when you’re doing Test Driven Development (the model has you design, code and then test, whereas TDD writes the tests first).

While the V-Model doesn’t define which development methodology it applies to, it sits perfectly in the waterfall development lifecycle. I would have liked to see more of a focus on agile development; merely mentioning iterative development and the Rational Unified Process (RUP) doesn’t count.

We should be encouraging agile processes, and teaching how testers fit into them and how they can work more closely with BAs and developers to ensure that requirements and stories are testable.

Agile is not just a fad, some new-age approach that will never catch on! Our teaching should take a much more positive stance towards it.

“Just learn it, don’t argue with it”

For me, this is the single biggest problem with certification. Even if you feel, or know, that something is wrong, don’t argue: just learn it so you can pass and have your name on a piece of paper. This encourages MCSE 2000-style certification, where all you need to pass is a brain dump of the terminology and sample questions.

This is not a very effective way to teach, and it is definitely not an effective way to learn. With software development, I have generally learnt by having in-depth discussions about the topic in question (the rights and wrongs, best practices and alternative approaches) in order to gain a good understanding. Being told that there is only one way to do something is not very constructive.

However, to pass the exam you really do just need to ‘learn’ the material. By contrast, during my scrum master training we had really good discussions about how scrum works in the real world. By using the material as a guide rather than the be-all and end-all, and without having to worry about passing an exam, we were able to dig deep into certain areas and discuss them, and as a result took much more away from the two days.

“Principle 6: Standardized tasks and processes are the foundation for continuous improvement and employee empowerment” (The Toyota Way)

Maybe we (I) have this all wrong? Maybe the aim of testing certification isn’t to teach you the latest and greatest techniques, but to provide you with a set of standardised tasks and processes to use as a foundation; it is, after all, a foundation certification. I’m currently reading The Toyota Way, and this is similar to principle 6: have standardised tasks and processes that allow for improvement instead of reinventing the wheel each time. That would make more sense.

If this is the case, then where is the continuous improvement, the updating of the course material to take into account new processes, tools and best practices? By standardising these new ideas, we could refine them into new best practices and improve the industry in general. While the content is updated, it appears to be very static in terms of ideas.

The future for testing certifications?

What is the future for testing certification? Judging from the numbers taking the examination, testing certification is here to stay. I think there are two initial approaches to improving it. The first is for the foundation course to drop the exam and instead follow an approach similar to the certified scrum master training, allowing for discussion and the sharing of ideas. With no exam there is less red tape: there is no need to write and mark papers, so the content can be updated more flexibly. The course could then be changed to include new ideas, sharing best practices and improving the industry in general.

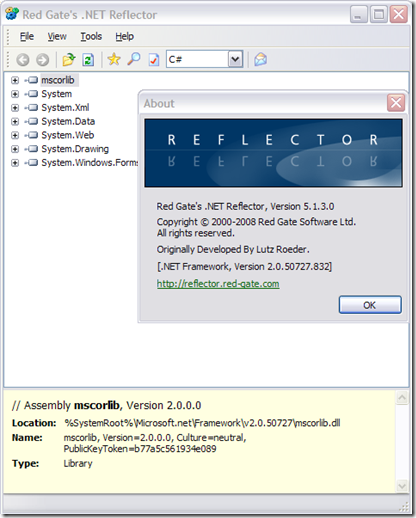

In terms of training, it would be great to see a course for testers similar to what developers have with “Nothing but .Net” from Jean-Paul Boodhoo: a serious deep dive into different testing techniques, tools and approaches. Alongside this, conferences have their role to play. This year, DeveloperDay in the UK has a number of testing-based sessions, all of which cover real-world, ‘take back to your office and use’ subjects. A number of the testing conferences I have seen focus more on the academic side, with papers on testing that, while interesting, don’t help you improve your work today.

I wonder whether the practitioner exam is any better.