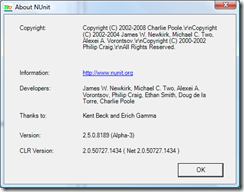

It’s always interesting to see how the different unit testing frameworks approach automated testing. Each framework has its own style, approach and feature set, and everyone has their own view on which framework everyone else should use. One of the more (you could say most) popular .NET frameworks is NUnit, and over the past few months Charlie has been working on v2.5, so I thought it was about time I took a look.

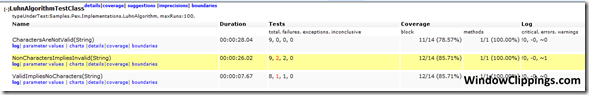

Parameterised Tests (documentation: Parameterized Tests)

Like xUnit and MbUnit, NUnit 2.5 includes the concept of parameterised tests. RowTest was included in 2.4.7, but additional attributes have now been added.

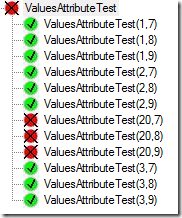

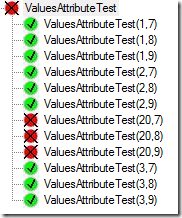

ValuesAttribute

The first new attribute allows for combinatorial testing, where the sets of inputs for each parameter are combined to create every possible test combination. It is useful in certain situations and always good to have in the toolbox, although I wouldn’t generally need it on a day-to-day basis. MbUnit has had this for a while, but I prefer the syntax of this approach, which I think is similar to the syntax in MbUnit v3.

To implement this, you add the ValuesAttribute, which takes an object array of test values, before the method parameter.

```csharp
[Test]
public void ValuesAttributeTest([Values(1,2,3, 20)] int v, [Values(9,8,7)] int v2)
{
    Assert.IsTrue(v < v2);
}
```

When NUnit loads this, it creates every combination of the test values, so we end up with 12 tests (4 × 3). If the test had another parameter, for example with 3 possible values, each existing combination would be repeated for each of those values, giving 36 tests. As a result, you can very quickly build up a good selection of test cases.
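To make the arithmetic concrete, here is a minimal sketch in plain C# (no NUnit involved) of how two value sets expand into combinations. The names CombinationSketch, Combine and CountPassing are made up for this illustration and are not part of NUnit; the sketch only mimics the pairing, not how NUnit builds its tests internally.

```csharp
using System.Collections.Generic;

public static class CombinationSketch
{
    // Pairs every value of the first parameter with every value of the second,
    // mimicking the combinatorial expansion described in the text.
    public static List<int[]> Combine(int[] first, int[] second)
    {
        var cases = new List<int[]>();
        foreach (int v in first)
        {
            foreach (int v2 in second)
            {
                cases.Add(new[] { v, v2 });
            }
        }
        return cases;
    }

    // Counts the generated cases for which the test's assertion (v < v2) holds.
    public static int CountPassing(List<int[]> cases)
    {
        int passing = 0;
        foreach (int[] c in cases)
        {
            if (c[0] < c[1])
            {
                passing++;
            }
        }
        return passing;
    }
}
```

Calling Combine with { 1, 2, 3, 20 } and { 9, 8, 7 } gives 12 cases, 9 of which satisfy v < v2; the three cases involving 20 are the ones that fail.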

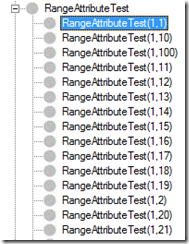

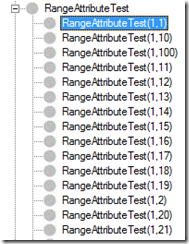

RangeAttribute

Similar to the ValuesAttribute, but instead of defining the individual values you define the range of possible values (min, max) and NUnit creates the tests for you. The values are then combined in the same combinatorial style.

```csharp
[Test]
public void RangeAttributeTest([Range(1,100)] int v, [Range(1,100)] int v2)
{
    Assert.IsTrue(v < v2);
}
```

The tests this creates then look something like this:

RandomAttribute

Similar to the two previous attributes, Random produces a set of random inputs. You can either give it just a count of the inputs to produce, as I have done for the first parameter, which gets 10 random values, or provide the min and max of the range together with the count, as I have done for the second parameter.

```csharp
[Test]
public void RandomAttributeTest([Random(10)] int v, [Random(1, 50, 10)] int v2)
{
    Assert.IsTrue(v < v2);
}
```

However, I’m not sure how useful testing against random data would actually be.

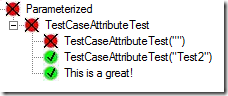

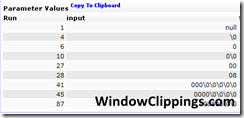

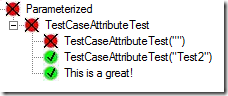

TestCaseAttribute

This attribute is similar to RowTest, but as part of the TestCase you can set your own TestName, which I think is really useful.

```csharp
[TestCase("Test1", TestName = "This is a great!", Description = "My first test")]
[TestCase("Test2")]
[TestCase("")]
public void TestCaseAttributeTest(string test)
{
    Console.WriteLine(test);
    Assert.IsNotEmpty(test);
}
```

The TestCase is then reflected in the GUI and report. Two of the tests are named after the test method plus their parameter input, while the one where we set the TestName property on the TestCaseAttribute uses that name instead.

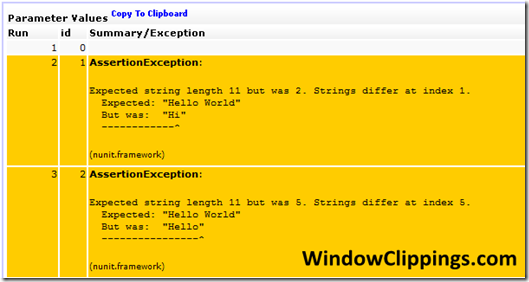

Also as part of the TestCaseAttribute, you are able to provide the Result. Generally, the expected result is passed in as a parameter and verified within the test itself. With this approach the result is part of the TestCase itself, and the test returns its result, which NUnit then compares to decide whether the test has passed. This makes more sense, as the parameters of the test are then purely test inputs and the test output is verified as an output. It is a small difference, but it is more logical.

```csharp
[TestCase("Test1", TestName = "This is a great!", Description = "My first test", Result = 5)]
public int TestCaseAttributeTest(string test)
{
    Console.WriteLine(test);
    return test.Length;
}
```
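For comparison, the more traditional style, where the expected value is passed in as an extra parameter and checked inside the test body, might look like the sketch below. The fixture name, method name and the second test case are made up for illustration.

```csharp
using NUnit.Framework;

[TestFixture]
public class ExpectedAsParameterSketch
{
    // The expected length travels with the inputs and is verified in the test body,
    // so the parameter list mixes inputs and expected outputs.
    [TestCase("Test1", 5)]
    [TestCase("Test22", 6)]
    public void LengthMatches(string test, int expected)
    {
        Assert.That(test.Length, Is.EqualTo(expected));
    }
}
```

With the Result style, the second parameter and the assertion both disappear, leaving only the inputs in the parameter list.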

This approach to testing has a lot of potential use cases, as it really improves test case (code) reuse and makes the tests easier to maintain.

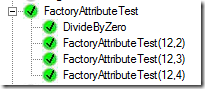

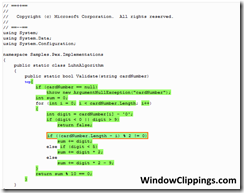

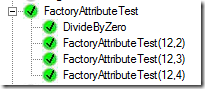

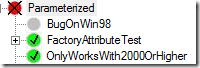

TestCaseFactoryAttribute

Similar to MbUnit’s FactoryAttribute, you define a class with a member that yield returns each TestCaseData object. TestCaseData has the advantage of a fluent interface for defining all the different properties, such as the expected result and the test name. While I have hardcoded the 4 test cases here, there is nothing stopping you from creating them dynamically.

```csharp
// Needs: using System; using System.Collections; using NUnit.Framework;

[Test,Factory(typeof(MyFactoryClass))]
public int FactoryAttributeTest(int a, int b)
{
    Console.WriteLine(a / b);
    return a/b;
}

public class MyFactoryClass
{
    [TestCaseFactory(typeof(int), typeof(int))]
    public static IEnumerable TestCases
    {
        get
        {
            // Each TestCaseData carries the arguments plus the expected return value.
            yield return new TestCaseData(12, 3).Returns(4);
            yield return new TestCaseData(12, 2).Returns(6);
            yield return new TestCaseData(12, 4).Returns(3);

            // Integer division by zero is expected to throw rather than return.
            yield return new TestCaseData(0, 0)
                .Throws(typeof(DivideByZeroException))
                .WithName("DivideByZero")
                .WithDescription("An exception is expected");
        }
    }
}
```

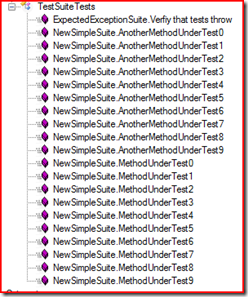

The TestCases then look like this:

New Assertions

Assert.Throws

Simple and straightforward: does the method call throw an exception?

```csharp
[Test]
public void ThrowException()
{
    Assert.Throws(throwEx);
}

public void throwEx()
{
    throw new Exception("Test");
}
```
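In the released NUnit versions I am familiar with, Assert.Throws also names the expected exception type, either as a generic argument or as a Type parameter. The overloads in the 2.5 alpha may differ, so treat the following as a sketch of the generic form and not as a description of this alpha build. The fixture and the ThrowEx helper are made up for illustration.

```csharp
using System;
using NUnit.Framework;

[TestFixture]
public class ThrowsSketch
{
    // Hypothetical helper that always throws, used only for this illustration.
    private static void ThrowEx()
    {
        throw new InvalidOperationException("Test");
    }

    [Test]
    public void ThrowsExpectedType()
    {
        // Generic form: the assertion passes only if this exact exception type is thrown.
        InvalidOperationException ex = Assert.Throws<InvalidOperationException>(ThrowEx);

        // The caught exception is returned, so its details can be checked as well.
        Assert.That(ex.Message, Is.EqualTo("Test"));
    }
}
```

Naming the type keeps the test from passing by accident when the code throws for an unrelated reason.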

Assert.DoesNotThrow

A similar approach: assert that the method does not throw any exceptions when it is called.

```csharp
[Test]
public void doesNotThrowExTest()
{
    Assert.DoesNotThrow(doesNotThrowEx);
}

public void doesNotThrowEx()
{}
```

Others

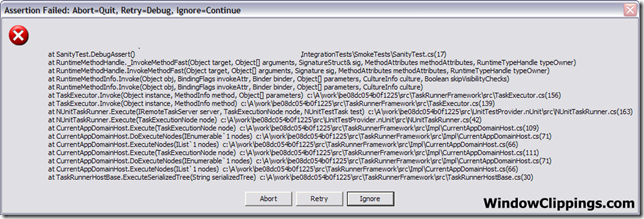

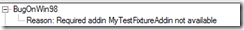

RequiredAddinAttribute

This is extremely useful if you are writing your own NUnit addins, as it is very important that the addin is available before you attempt to execute your tests. You add an attribute for each addin you require:

```csharp
[assembly: RequiredAddin("MyTestFixtureAddin")]
[assembly: RequiredAddin("MyTestAddin")]
[assembly: RequiredAddin("MyDecoratorAddin")]
```

If any of them are not available when you execute the tests, the run will fail, but it will actually tell you the reason why.

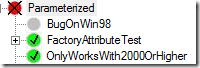

PlatformAttribute

Finally, there is a new PlatformAttribute. This attribute defines which platforms the test is allowed to run on, which is really useful if you have issues with a certain target platform, or if you have a set of tests that only target one platform, such as Mono.

```csharp
[Test]
[Platform(Exclude="Win98,WinME", Reason = "This only works on Windows 2000 and above")]
public void OnlyWorksWith2000OrHigher()
{
    // Windows 2000 reports major version 5, so the check has to include 5.
    Assert.IsTrue(Environment.OSVersion.Version.Major >= 5);
}

[Test]
[Platform(Include = "Win98,WinME")]
public void BugOnWin98()
{
    Assert.IsTrue(Environment.OSVersion.Version.Major > 5);
}
```

The list of possible platforms can be found here: http://nunit.org/index.php?p=platform&r=2.5

When the test is executed on an invalid platform, the test is skipped.

This post has summarised all of the interesting bits of the new Alpha release. I expect to see some changes before the final 2.5 release. It’s nice to see NUnit taking the different approaches from the different frameworks and incorporating them with its own approach; hopefully v3 will have some really interesting concepts. The main feature missing at the moment is data-driven test attributes, such as pulling test cases from CSV or SQL Server, but the framework has an additional extension point to make such an attribute easier to write. Beyond this, I think most of the missing features would be more suitable for NUnit 3.0.

Release Notes: http://nunit.com/index.php?p=releaseNotes&r=2.5

Download: http://nunit.com/index.php?p=download